Hyperion system

Using GPU accelerators

Basic submission script for GPGPU-capable applications:

Generic batch script requesting 1 GPU

#!/bin/bash

#SBATCH --qos=regular

#SBATCH --job-name=JOB_NAME

#SBATCH --cpus-per-task=1

#SBATCH --gres=gpu:1

#SBATCH --mem=90gb

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=1

module load program/program_version

srun binary < input

In the general partition you can request up to one NVIDIA RTX A6000, two NVIDIA RTX 3090, or eight NVIDIA A100 GPUs per node. To do so, adjust the corresponding lines in the batch script:

#SBATCH --gres=gpu:2

#SBATCH --constraint=rtx3090

or

#SBATCH --gres=gpu:8

#SBATCH --constraint=a100
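As a sketch of a complete script for one of these cases, the following requests two RTX 3090 GPUs and prints how many GPUs the job can see. It assumes that Slurm exports CUDA_VISIBLE_DEVICES for GPU jobs on this cluster, which is the usual behaviour but is not confirmed here. The job name and the count_gpus helper are illustrative.

```shell
#!/bin/bash
#SBATCH --qos=regular
#SBATCH --job-name=gpu_check
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=1
#SBATCH --cpus-per-task=1
#SBATCH --gres=gpu:2
#SBATCH --constraint=rtx3090

# Illustrative helper: count the entries in a comma-separated list
# of GPU indices such as "0,1". An empty list counts as 0.
count_gpus() {
    printf '%s\n' "$1" | awk -F',' '{ print NF }'
}

# Assumption: Slurm sets CUDA_VISIBLE_DEVICES for jobs that request GPUs.
echo "GPUs visible to this job: $(count_gpus "${CUDA_VISIBLE_DEVICES:-}")"
```

If the printed number does not match the --gres request, the job is probably not running inside the allocation you expected.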

There are six different types of GPUs on Hyperion:

| Compute Node | GPU | How to request the GPU with SLURM |
|---|---|---|
| hyperion-[030, 052, 074, 96, 118, 140, 162, 182] | 2x NVIDIA RTX 3090 24GB | #SBATCH --gres=gpu:2<br>#SBATCH --constraint=rtx3090 |
| hyperion-[253,282-284] | 8x NVIDIA A100 SXM4 80GB | #SBATCH --gres=gpu:8<br>#SBATCH --constraint=a100-sxm4 |
| hyperion-[252,254,257] | 8x NVIDIA A100 PCIe 80GB | #SBATCH --gres=gpu:8<br>#SBATCH --constraint=a100-pcie |
| hyperion-263 | 1x NVIDIA RTX A6000 48GB | #SBATCH --gres=gpu:1<br>#SBATCH --constraint=a6000 |

| Compute Node | GPU | How to request the GPU with SLURM |
|---|---|---|
| hyperion-[255,256] | 8x NVIDIA A100 SXM4 80GB | #SBATCH --gres=gpu:8<br>#SBATCH --constraint=a100-sxm4 |
| hyperion-[260-261] | 1x NVIDIA A30 24GB | #SBATCH --gres=gpu:2<br>#SBATCH --constraint=a30 |
| hyperion-293 | 3x NVIDIA RTX 4090 24GB | #SBATCH --gres=gpu:3<br>#SBATCH --constraint=rtx4090 |

As shown in the tables above, you select a specific type of GPU by adding the corresponding --constraint to the submission script.

If --constraint=a100 is specified, either type of A100 (SXM4 or PCIe) can be assigned to the job.

Warning

Jobs on the general partition cannot request fewer than 4 NVIDIA A100 SXM4 GPUs per node.

Selecting microarchitecture with GPU constraints

When you request GPU resources through the gres option in your SLURM script, you are allocated a node with the specified type of GPU. The current configuration is such that:

- Nodes equipped with NVIDIA RTX 3090 GPUs have Cascadelake microarchitecture processors.

- Nodes equipped with NVIDIA RTX A6000 GPUs have Icelake microarchitecture processors.

- Nodes equipped with NVIDIA A100 GPUs have Icelake microarchitecture processors.

- Nodes equipped with NVIDIA RTX 4090 GPUs have AMD Zen 3 microarchitecture processors.

Two features are defined for GPU nodes: gpu-cascadelake and gpu-icelake. You do not need them when you select a GPU model with the --constraint option, because the GPU model already determines both the GPU type and the microarchitecture of the allocated node.

If you need a particular microarchitecture but not a particular GPU model, use these features as constraints instead:

#SBATCH --gres=gpu:x

#SBATCH --constraint=gpu-icelake

or

#SBATCH --gres=gpu:x

#SBATCH --constraint=gpu-cascadelake
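To check which nodes carry a given feature before choosing a constraint, the feature list reported by sinfo can be filtered. The snippet below is a minimal sketch: nodes_with_feature is an illustrative helper, not part of Slurm, and it assumes the constraints described above appear as comma-separated node features in the sinfo output.

```shell
#!/bin/bash

# Illustrative helper: read lines of the form "<node> <feat1,feat2,...>"
# from stdin and print the nodes whose feature list contains $1.
nodes_with_feature() {
    local feature="$1"
    awk -v f="$feature" '{
        n = split($2, feats, ",")
        for (i = 1; i <= n; i++) {
            if (feats[i] == f) { print $1; break }
        }
    }'
}

# Only query the scheduler when sinfo is available (i.e. on the cluster).
# %N prints the node name and %f its features; sort -u removes the
# duplicates produced by nodes that belong to several partitions.
if command -v sinfo >/dev/null 2>&1; then
    sinfo --noheader --Node --format="%N %f" | sort -u | nodes_with_feature gpu-icelake
fi
```

Replacing gpu-icelake with gpu-cascadelake, or with a GPU model constraint such as a100, lists the nodes that would satisfy that constraint.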

Usage policy for the NVIDIA A100 SXM4 GPUs

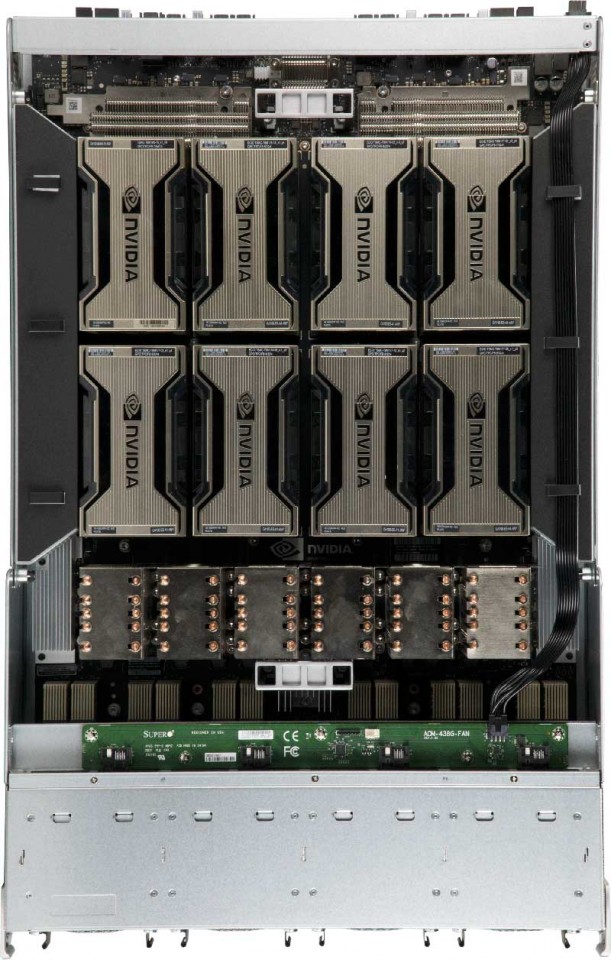

Taking into account that deep learning models are becoming increasingly complex and larger in size, and that there are more users in our clusters requiring more power and greater computational capacity supported by GPUs, we have acquired 4 nodes with 8 NVIDIA A100 SXM4 GPUs each for this specific purpose at the DIPC.

These nodes are equipped with NVIDIA's HGX computing platform, which allows NVLink interconnection between all GPUs within the same node, capable of transferring information at up to 600GB/s compared to the 64GB/s offered by GPUs connected via PCIe.

For this reason, jobs with fewer than 4 GPUs per node will not be eligible for the nodes equipped with these GPUs, as we consider their purpose to be very specific and it is important that they are available for computations that will truly utilize their potential.

Nevertheless, we have also implemented a special partition that allows jobs requiring fewer than 4 GPUs per node to be launched on these nodes in a special way: the preemption-gpu partition.
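The rule above can be expressed as a small helper for use in submission wrappers. This is only a sketch of the policy as described on this page: suggest_sxm4_partition is a hypothetical function name, and the upper limit of 8 comes from the number of GPUs per node listed earlier.

```shell
#!/bin/bash

# Hypothetical helper: suggest a partition for a job that wants the given
# number of NVIDIA A100 SXM4 GPUs per node, following the policy above.
suggest_sxm4_partition() {
    case "$1" in
        [1-3]) echo preemption-gpu ;;   # fewer than 4 GPUs per node
        [4-8]) echo general ;;          # 4 to 8 GPUs per node
        *)
            echo "invalid request: use between 1 and 8 GPUs per node" >&2
            return 1
            ;;
    esac
}

suggest_sxm4_partition 2   # prints "preemption-gpu"
suggest_sxm4_partition 8   # prints "general"
```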

Special partition: the "preemption-gpu" partition

This partition includes all the nodes equipped with NVIDIA A100 SXM4 GPUs that belong to the general partition. There may be times when these nodes are not fully utilized while the other GPU nodes are. A user who wants to take advantage of this situation on an occasional and temporary basis can use this partition to submit jobs that require fewer than 4 GPUs per node to these nodes. As with the preemption partition, its behaviour is as follows:

- When a job is submitted to the preemption-gpu partition:
    - If the requested resources are available, the job will start running instantly.
    - If the requested resources are in use, the job will remain in a pending state, same as on the general partition.
- When a job requesting 4 or more NVIDIA A100 SXM4 GPUs per node is submitted to the general partition:
    - If the requested resources are available, the job will start running instantly.
    - If the requested resources are in use by jobs submitted to the preemption-gpu partition, those jobs will be canceled and requeued to preemption-gpu.
    - If the requested resources are in use by jobs submitted to the general partition, the job will remain in a pending state.

To submit a job to the preemption-gpu partition, add the following line to the batch script:

#SBATCH --partition=preemption-gpu
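Because jobs in this partition can be canceled and requeued, it helps if the batch script can tell a fresh start from a restart. The following is a minimal sketch under several assumptions: the checkpoint file name, the start_mode helper and the commented-out --resume flag are hypothetical, and any QoS the partition may require is omitted. SLURM_RESTART_COUNT is the variable Slurm sets when a job has been restarted after a requeue.

```shell
#!/bin/bash
#SBATCH --partition=preemption-gpu
#SBATCH --job-name=JOB_NAME
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=1
#SBATCH --cpus-per-task=1
#SBATCH --gres=gpu:2

# Hypothetical helper: print "resume" if the checkpoint file exists,
# otherwise print "fresh".
start_mode() {
    if [ -f "$1" ]; then
        echo resume
    else
        echo fresh
    fi
}

# Hypothetical checkpoint file written by your own application.
CHECKPOINT="checkpoint.dat"

# SLURM_RESTART_COUNT is unset on the first run, so default to 0.
echo "Restart count: ${SLURM_RESTART_COUNT:-0}"
echo "Start mode: $(start_mode "$CHECKPOINT")"

# Launch the application according to the start mode, for example:
#   srun binary < input                      (fresh start)
#   srun binary --resume "$CHECKPOINT"       (hypothetical resume flag)
```

Whether a requeued job can actually continue where it stopped depends on the application writing its own checkpoints; Slurm only restarts the script.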

Different GPU priorities

When a job requests a certain number of GPUs without specifying a GPU type, GPUs are assigned as follows:

| Partition | Requested GPUs | GPU Priority |
|---|---|---|
| general | 1 | NVIDIA RTX A6000, NVIDIA RTX 3090, NVIDIA A100 PCIe |
| general | 2 | NVIDIA RTX 3090, NVIDIA A100 PCIe |
| general | 3 | NVIDIA A100 PCIe |
| general | >3 | NVIDIA A100 PCIe, NVIDIA A100 SXM4 |
| preemption | 1-2 | NVIDIA A30, NVIDIA A100 SXM4 |
| preemption | >2 | NVIDIA A100 SXM4 |
| preemption-gpu | <4 | NVIDIA A100 SXM4 |
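When no GPU type is requested, it can be useful to record inside the job which model was actually assigned. The sketch below uses the nvidia-smi query interface and assumes nvidia-smi is available on the compute node; summarize_gpus is an illustrative helper.

```shell
#!/bin/bash

# Illustrative helper: read one GPU name per line from stdin and print
# a summary such as "2 x NVIDIA GeForce RTX 3090" for each distinct name.
summarize_gpus() {
    sort | uniq -c | awk '{
        count = $1
        $1 = ""
        sub(/^ /, "")
        print count " x " $0
    }'
}

# Only query the driver when nvidia-smi is present (i.e. on a GPU node).
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name --format=csv,noheader | summarize_gpus
fi
```

Placing these lines before the srun call in a batch script writes the assigned GPU model to the job output, which makes it easier to compare runs that landed on different hardware.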